
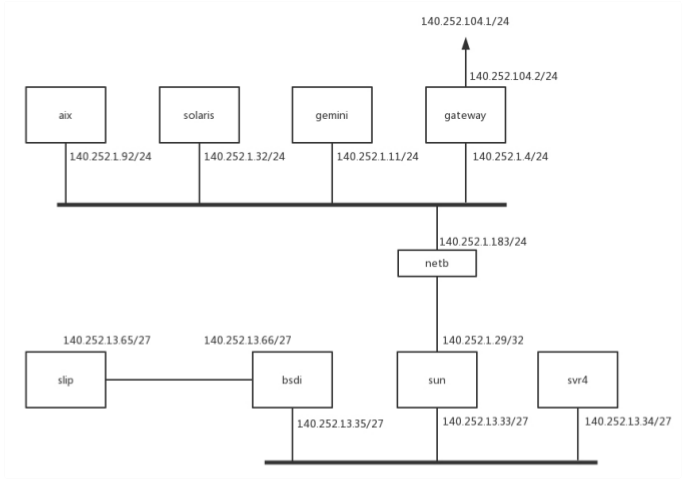

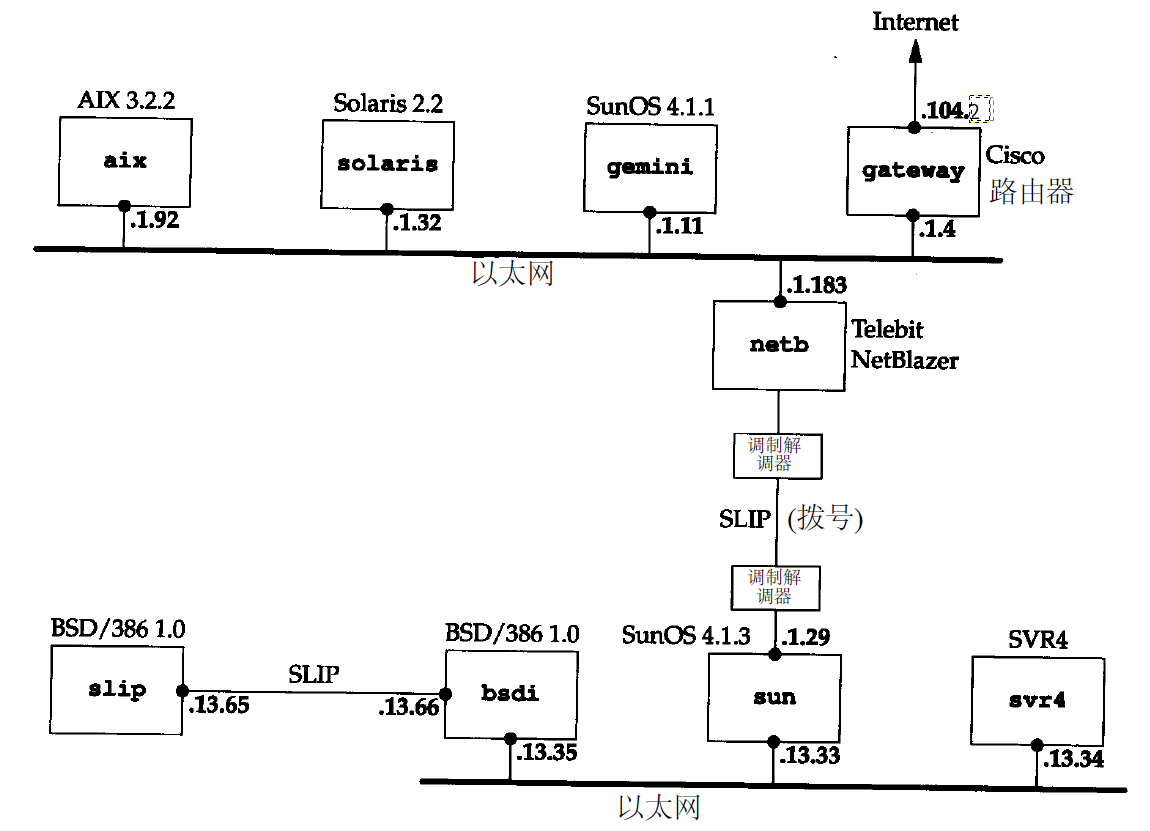

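This borrows the TCP/IP lab setup from the course 《趣谈网络协议》 (Interesting Talks on Network Protocols). The environment has two parts: the virtual machines, which are Docker containers, and the virtual network, which is built with Open vSwitch.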

Installing Docker

Run the installation as root:

sudo su

Older versions of Docker were called docker, docker.io or docker-engine. If any of them are installed, uninstall them:

apt-get remove docker docker-engine docker.io

Update the apt package index:

apt-get update

Install the packages that let apt fetch repositories over HTTPS:

apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg-agent \
    software-properties-common

Or install the same packages in a single line:

apt-get -y install apt-transport-https ca-certificates curl gnupg-agent software-properties-common

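The command also works without -y: apt asks whether to install, and you type y and press Enter.

With apt-get -y install, the prompt is skipped and the packages are installed directly.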

Add Docker's official GPG key:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg > gpg

apt-key add gpg

Or add Docker's official GPG key in one step:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

The key fingerprint is 9DC8 5822 9FC7 DD38 854A E2D8 8D81 803C 0EBF CD88. Search for the last 8 characters of the fingerprint to verify that you now have the key with this fingerprint:

apt-key fingerprint 0EBFCD88

Set up the stable repository with the following command:

add-apt-repository \
    "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
    $(lsb_release -cs) \
    stable"

Or set up the stable repository in a single line:

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

Install Docker Engine-Community. Step one: update the apt package index:

apt-get -y update

Step two: use apt-cache madison to list the available versions and their sources:

apt-cache madison docker-ce

Step three: install Docker Engine-Community version 18.06.0~ce~3-0~ubuntu:

apt-get -y install docker-ce=18.06.0~ce~3-0~ubuntu

Docker is now installed.

Installing Open vSwitch

apt-get -y install openvswitch-common openvswitch-dbg openvswitch-switch python-openvswitch openvswitch-ipsec openvswitch-pki openvswitch-vtep

Installing bridge-utils

apt-get -y install bridge-utils

Installing arping

apt-get -y install arping
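Before moving on, it can help to confirm that every command the later scripts rely on is actually on the PATH. A minimal sketch; `check_tools` is a hypothetical helper, and the tool list in the usage comment is an assumption based on the packages installed above:

```shell
# Hypothetical helper: report each given command that is not on the PATH.
# Returns 0 if all of them are present, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (tool list assumed from the packages installed above):
# check_tools docker ovs-vsctl brctl arping && echo "all tools present"
```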

Preparing the Docker image

Pull the image:

docker pull hub.c.163.com/liuchao110119163/ubuntu:tcpip

You can list the Docker images available on this machine:

docker images

Starting the whole environment

Clone the shell scripts to the local machine:

git clone https://github.com/popsuper1982/tcpipillustrated.git

cd tcpipillustrated

chmod +x setupenv.sh

Check the host's network interfaces:

ip addr

Output:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s25: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 50:7b:9d:62:fe:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.44.151/24 brd 192.168.44.255 scope global noprefixroute dynamic enp0s25

valid_lft 26597sec preferred_lft 26597sec

inet6 fe80::e8b7:c38e:b752:14ec/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: wlp4s0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether 30:52:cb:70:9f:23 brd ff:ff:ff:ff:ff:ff

4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 2e:57:cc:13:5f:bc brd ff:ff:ff:ff:ff:ff

5: net2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 0e:72:c6:ce:fb:4d brd ff:ff:ff:ff:ff:ff

6: net1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 1a:b1:8b:e9:36:4e brd ff:ff:ff:ff:ff:ff

7: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:7b:2f:06:38 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:7bff:fe2f:638/64 scope link

valid_lft forever preferred_lft forever

In this list, find the interface that connects to the external network; here it is enp0s25.
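Instead of reading the interface name off by eye, it can be taken from the default route, since that is the interface traffic leaves through. A minimal sketch, assuming the host has a single default route; `default_iface` is a hypothetical name:

```shell
# Read `ip route show default` output on stdin and print the "dev" field
# of the first default route.
default_iface() {
  awk '$1 == "default" { for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Usage on the lab host (requires iproute2):
# ./setupenv.sh "$(ip route show default | default_iface)" hub.c.163.com/liuchao110119163/ubuntu:tcpip
```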

The image is hub.c.163.com/liuchao110119163/ubuntu:tcpip.

Pass these two parameters to the shell script and run it:

./setupenv.sh enp0s25 hub.c.163.com/liuchao110119163/ubuntu:tcpip

The whole environment is now set up: all containers can ping each other, and all of them can reach the Internet.
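To check this claim quickly, one container can ping every address the setup script assigned. A minimal sketch; `check_reachability` is a hypothetical helper, the address list is copied from setupenv.sh plus the host's gatewayout address 140.252.104.1, and it assumes the image's ping supports `-W` (timeout in seconds):

```shell
# Ping every lab address once from inside one container (default: slip)
# and print ok/FAILED per address. Returns 1 if any ping failed.
check_reachability() {
  src=${1:-slip}
  failed=0
  for ip in 140.252.13.66 140.252.13.33 140.252.13.34 140.252.13.35 \
            140.252.1.29 140.252.1.4 140.252.1.11 140.252.1.32 \
            140.252.1.92 140.252.1.183 140.252.104.1; do
    if docker exec "$src" ping -c 1 -W 1 "$ip" >/dev/null 2>&1; then
      echo "$src -> $ip ok"
    else
      echo "$src -> $ip FAILED"
      failed=1
    fi
  done
  return "$failed"
}

# Usage: check_reachability slip
```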

One problem remains: after the host is shut down and restarted, all these containers are in the Exited state. How can they all be restarted at once?

List the Docker containers (-a is needed, because plain docker ps only shows running containers):

docker ps -a

Note down all the container IDs and restart them in one go:

docker restart 835a1b493dc7 e31b1dc4c8e1 d88b1259001a 922d2fa513ae 64cce49d1b2e 69259c6aee43 16e3eccefd21 b33dddfa001c b0dc636cf6ef

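However, the network configuration no longer works after the restart: the interfaces and routes that setupenv.sh added lived in the containers' network namespaces, which are destroyed when the containers stop. So the script has to be run again.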

First, stop all these containers:

docker stop 835a1b493dc7 e31b1dc4c8e1 d88b1259001a 922d2fa513ae 64cce49d1b2e 69259c6aee43 16e3eccefd21 b33dddfa001c b0dc636cf6ef
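Copying nine IDs by hand is error-prone. Since every lab container was started from the same image, Docker's `ancestor` filter can list them instead. A minimal sketch; `lab_ids` and the `LAB_IMAGE` variable are hypothetical names:

```shell
# Image used by setupenv.sh; override LAB_IMAGE if you built your own.
LAB_IMAGE=${LAB_IMAGE:-hub.c.163.com/liuchao110119163/ubuntu:tcpip}

# Print the IDs of all containers (running or exited) created from that image.
lab_ids() {
  docker ps -aq --filter "ancestor=$LAB_IMAGE"
}

# Usage:
# docker stop $(lab_ids)
# docker rm $(lab_ids)   # removes only the lab containers
```

Unlike docker container prune, `docker rm $(lab_ids)` leaves stopped containers that belong to other projects alone.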

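Remove all stopped containers (note that this removes every stopped container on the host, not only the lab ones):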

docker container prune

Check the IP configuration:

ip addr

Check which bridges were added by Open vSwitch:

ovs-vsctl list-br

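net1 and net2, the two bridges the script created, are still there (Open vSwitch keeps its bridges in its database across reboots), so bring them down and delete them: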

ip link set net1 down

ip link set net2 down

ovs-vsctl del-br net1

ovs-vsctl del-br net2

Check the interfaces again: net1 and net2 are gone.

Then run the shell script again, as described above, to recreate the containers.
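The cleanup steps above can be collected into one function so the lab can be rebuilt with a single command. A minimal sketch under stated assumptions: `teardown_lab` is a hypothetical name, the container and bridge names are the ones setupenv.sh uses, and it also removes the MASQUERADE rule that setupenv.sh appends, so repeated runs do not stack duplicate NAT rules:

```shell
# Undo what setupenv.sh created. $1: the public interface passed to setupenv.sh.
teardown_lab() {
  publiceth=$1
  # Force-remove the nine containers by the names used in setupenv.sh.
  docker rm -f aix solaris gemini gateway netb sun svr4 bsdi slip
  # Delete the two Open vSwitch bridges; --if-exists makes this safe to rerun.
  ovs-vsctl --if-exists del-br net1
  ovs-vsctl --if-exists del-br net2
  # Deleting one end of a veth pair deletes its peer too; the host routes via
  # gatewayout disappear with it. It may already be gone with the gateway container.
  ip link del gatewayout 2>/dev/null || true
  # Remove the NAT rule added by setupenv.sh (ignore the error if it is absent).
  iptables -t nat -D POSTROUTING -o "$publiceth" -j MASQUERADE 2>/dev/null || true
}

# Usage (as root): teardown_lab enp0s25 && ./setupenv.sh enp0s25 hub.c.163.com/liuchao110119163/ubuntu:tcpip
```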

The tcpipillustrated project

The tcpipillustrated GitHub project contains 7 files:

Dockerfile

Openvswitch实验教程.pptx

README.md

pipework

proxy-arp

proxy-arp.conf

setupenv.sh

Openvswitch实验教程.pptx is a slide deck that focuses on Open vSwitch.

README.md contains a single line: the project name, tcpipillustrated.

The setupenv.sh script

Here is the content of setupenv.sh:

#!/bin/bash

publiceth=$1

imagename=$2

# Pre-configure the host environment

systemctl stop ufw

systemctl disable ufw

/sbin/iptables -P FORWARD ACCEPT

echo 1 > /proc/sys/net/ipv4/ip_forward

sysctl -p

/sbin/iptables -P FORWARD ACCEPT

# Create every node in the figure, one container per node

echo "create all containers"

docker run --privileged=true --net none --name aix -d ${imagename}

docker run --privileged=true --net none --name solaris -d ${imagename}

docker run --privileged=true --net none --name gemini -d ${imagename}

docker run --privileged=true --net none --name gateway -d ${imagename}

docker run --privileged=true --net none --name netb -d ${imagename}

docker run --privileged=true --net none --name sun -d ${imagename}

docker run --privileged=true --net none --name svr4 -d ${imagename}

docker run --privileged=true --net none --name bsdi -d ${imagename}

docker run --privileged=true --net none --name slip -d ${imagename}

# Create two bridges, representing two layer-2 networks

echo "create bridges"

ovs-vsctl add-br net1

ip link set net1 up

ovs-vsctl add-br net2

ip link set net2 up

#brctl addbr net1

#brctl addbr net2

# Connect all nodes to the two networks

echo "connect all containers to bridges"

chmod +x ./pipework

./pipework net1 aix 140.252.1.92/24

./pipework net1 solaris 140.252.1.32/24

./pipework net1 gemini 140.252.1.11/24

./pipework net1 gateway 140.252.1.4/24

./pipework net1 netb 140.252.1.183/24

./pipework net2 bsdi 140.252.13.35/27

./pipework net2 sun 140.252.13.33/27

./pipework net2 svr4 140.252.13.34/27

# Add the point-to-point network from slip to bsdi

echo "add p2p from slip to bsdi"

# Create a veth pair (two peer interfaces)

ip link add name slipside mtu 1500 type veth peer name bsdiside mtu 1500

# Move one end into slip's network namespace

DOCKERPID1=$(docker inspect '--format={{ .State.Pid }}' slip)

ln -s /proc/${DOCKERPID1}/ns/net /var/run/netns/${DOCKERPID1}

ip link set slipside netns ${DOCKERPID1}

# Move the other end into bsdi's network namespace

DOCKERPID2=$(docker inspect '--format={{ .State.Pid }}' bsdi)

ln -s /proc/${DOCKERPID2}/ns/net /var/run/netns/${DOCKERPID2}

ip link set bsdiside netns ${DOCKERPID2}

# Add an IP address to slip's end

docker exec -it slip ip addr add 140.252.13.65/27 dev slipside

docker exec -it slip ip link set slipside up

# Add an IP address to bsdi's end

docker exec -it bsdi ip addr add 140.252.13.66/27 dev bsdiside

docker exec -it bsdi ip link set bsdiside up

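# On closer inspection, the p2p network and the layer-2 network below are not the same network.

# The p2p network's CIDR is 140.252.13.64/27, while the layer-2 network below is 140.252.13.32/27.

# So for slip, the default gateway for outbound traffic is 13.66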

docker exec -it slip ip route add default via 140.252.13.66 dev slipside

# For bsdi, the default gateway for outbound traffic is 13.33

docker exec -it bsdi ip route add default via 140.252.13.33 dev eth1

# For sun to reach the p2p network, add the following route

docker exec -it sun ip route add 140.252.13.64/27 via 140.252.13.35 dev eth1

# For svr4, the default gateway for outbound traffic is 13.33

docker exec -it svr4 ip route add default via 140.252.13.33 dev eth1

# For svr4 to reach the p2p network, add the following route

docker exec -it svr4 ip route add 140.252.13.64/27 via 140.252.13.35 dev eth1

# At this point, slip can ping every node in the network below.

# Add the point-to-point network from sun to netb

echo "add p2p from sun to netb"

# Create a veth pair

ip link add name sunside mtu 1500 type veth peer name netbside mtu 1500

# Move one end into sun's network namespace

DOCKERPID3=$(docker inspect '--format={{ .State.Pid }}' sun)

ln -s /proc/${DOCKERPID3}/ns/net /var/run/netns/${DOCKERPID3}

ip link set sunside netns ${DOCKERPID3}

# Move the other end into netb's network namespace

DOCKERPID4=$(docker inspect '--format={{ .State.Pid }}' netb)

ln -s /proc/${DOCKERPID4}/ns/net /var/run/netns/${DOCKERPID4}

ip link set netbside netns ${DOCKERPID4}

# Add an address to sun's interface

docker exec -it sun ip addr add 140.252.1.29/24 dev sunside

docker exec -it sun ip link set sunside up

# In sun, the default route for outbound traffic is via 1.4

docker exec -it sun ip route add default via 140.252.1.4 dev sunside

# In netb, the default route for outbound traffic is via 1.4

docker exec -it netb ip route add default via 140.252.1.4 dev eth1

# In netb, the p2p side needs no IP address, but it needs routes to reach the layer-2 network below

docker exec -it netb ip link set netbside up

docker exec -it netb ip route add 140.252.1.29/32 dev netbside

docker exec -it netb ip route add 140.252.13.32/27 via 140.252.1.29 dev netbside

docker exec -it netb ip route add 140.252.13.64/27 via 140.252.1.29 dev netbside

# Configure proxy ARP for netb

echo "config arp proxy for netb"

# netb is not an ordinary router: both of its sides belong to the same layer-2 network, so proxy ARP is needed to split that one layer-2 network into two.

# Set proxy_arp to 1

docker exec -it netb bash -c "echo 1 > /proc/sys/net/ipv4/conf/eth1/proxy_arp"

docker exec -it netb bash -c "echo 1 > /proc/sys/net/ipv4/conf/netbside/proxy_arp"

# Set up the ARP replies with the proxy-arp script

# Contents of proxy-arp.conf:

#eth1 140.252.1.29

#netbside 140.252.1.92

#netbside 140.252.1.32

#netbside 140.252.1.11

#netbside 140.252.1.4

# Copy the config file and the script into the container

docker cp proxy-arp.conf netb:/etc/proxy-arp.conf

docker cp proxy-arp netb:/root/proxy-arp

# Run the proxy-arp script inside the container

docker exec -it netb chmod +x /root/proxy-arp

docker exec -it netb /root/proxy-arp start

# Configure routes on every machine in the upper layer-2 network

echo "config all routes"

# In aix, the default route for outbound traffic is via 1.4

docker exec -it aix ip route add default via 140.252.1.4 dev eth1

# In aix, the following routes reach the layer-2 network below

docker exec -it aix ip route add 140.252.13.32/27 via 140.252.1.29 dev eth1

docker exec -it aix ip route add 140.252.13.64/27 via 140.252.1.29 dev eth1

# Configure solaris the same way

docker exec -it solaris ip route add default via 140.252.1.4 dev eth1

docker exec -it solaris ip route add 140.252.13.32/27 via 140.252.1.29 dev eth1

docker exec -it solaris ip route add 140.252.13.64/27 via 140.252.1.29 dev eth1

# Configure gemini the same way

docker exec -it gemini ip route add default via 140.252.1.4 dev eth1

docker exec -it gemini ip route add 140.252.13.32/27 via 140.252.1.29 dev eth1

docker exec -it gemini ip route add 140.252.13.64/27 via 140.252.1.29 dev eth1

# These routes let gateway reach the layer-2 network below

docker exec -it gateway ip route add 140.252.13.32/27 via 140.252.1.29 dev eth1

docker exec -it gateway ip route add 140.252.13.64/27 via 140.252.1.29 dev eth1

# At this point, the upper and lower layer-2 networks can reach each other

# Configure Internet access

echo "add public network"

# Create a veth pair

ip link add name gatewayin mtu 1500 type veth peer name gatewayout mtu 1500

ip addr add 140.252.104.1/24 dev gatewayout

ip link set gatewayout up

# Move one end into gateway's network namespace

DOCKERPID5=$(docker inspect '--format={{ .State.Pid }}' gateway)

ln -s /proc/${DOCKERPID5}/ns/net /var/run/netns/${DOCKERPID5}

ip link set gatewayin netns ${DOCKERPID5}

# Add an address to gateway's interface

docker exec -it gateway ip addr add 140.252.104.2/24 dev gatewayin

docker exec -it gateway ip link set gatewayin up

# In gateway, the default route for outbound traffic is via 140.252.104.1

docker exec -it gateway ip route add default via 140.252.104.1 dev gatewayin

iptables -t nat -A POSTROUTING -o ${publiceth} -j MASQUERADE

ip route add 140.252.13.32/27 via 140.252.104.2 dev gatewayout

ip route add 140.252.13.64/27 via 140.252.104.2 dev gatewayout

ip route add 140.252.1.0/24 via 140.252.104.2 dev gatewayout
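To see what a call such as `./pipework net1 aix 140.252.1.92/24` boils down to for an Open vSwitch bridge, here is a simplified manual equivalent. It is a sketch, not pipework itself: `attach_to_ovs` is a hypothetical name, it skips pipework's VLAN, MAC, DHCP, MTU and gateway handling, and it uses `nsenter` to run commands in the container's network namespace instead of the /var/run/netns symlink that pipework creates. The veth names follow pipework's `v${CONTAINER_IFNAME}pl${NSPID}` / `v${CONTAINER_IFNAME}pg${NSPID}` scheme with eth1 as the container interface:

```shell
# attach_to_ovs <ovs-bridge> <container> <ip/prefix>  (run as root)
attach_to_ovs() {
  bridge=$1
  container=$2
  cidr=$3
  # PID of the container's main process; its network namespace is the container's.
  pid=$(docker inspect --format '{{.State.Pid}}' "$container")
  host_if="veth1pl$pid"   # host end, plugged into the OVS bridge
  guest_if="veth1pg$pid"  # container end, renamed to eth1 below
  ip link add name "$host_if" type veth peer name "$guest_if"
  ovs-vsctl add-port "$bridge" "$host_if"
  ip link set "$host_if" up
  # Move the container end into the container's network namespace.
  ip link set "$guest_if" netns "$pid"
  # Configure it from inside that namespace.
  nsenter -t "$pid" -n ip link set "$guest_if" name eth1
  nsenter -t "$pid" -n ip addr add "$cidr" dev eth1
  nsenter -t "$pid" -n ip link set eth1 up
}

# Usage: attach_to_ovs net1 aix 140.252.1.92/24
```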

The pipework script

#!/bin/sh

# This code should (try to) follow Google's Shell Style Guide

# (https://google-styleguide.googlecode.com/svn/trunk/shell.xml)

set -e

case "$1" in

--wait)

WAIT=1

;;

esac

IFNAME=$1

# default value set further down if not set here

CONTAINER_IFNAME=

if [ "$2" = "-i" ]; then

CONTAINER_IFNAME=$3

shift 2

fi

GUESTNAME=$2

IPADDR=$3

MACADDR=$4

case "$MACADDR" in

*@*)

VLAN="${MACADDR#*@}"

VLAN="${VLAN%%@*}"

MACADDR="${MACADDR%%@*}"

;;

*)

VLAN=

;;

esac

[ "$IPADDR" ] || [ "$WAIT" ] || {

echo "Syntax:"

echo "pipework <hostinterface> [-i containerinterface] <guest> <ipaddr>/<subnet>[@default_gateway] [macaddr][@vlan]"

echo "pipework <hostinterface> [-i containerinterface] <guest> dhcp [macaddr][@vlan]"

echo "pipework --wait [-i containerinterface]"

exit 1

}

# Succeed if the given utility is installed. Fail otherwise.

# For explanations about `which` vs `type` vs `command`, see:

# http://stackoverflow.com/questions/592620/check-if-a-program-exists-from-a-bash-script/677212#677212

# (Thanks to @chenhanxiao for pointing this out!)

installed () {

command -v "$1" >/dev/null 2>&1

}

# Google Styleguide says error messages should go to standard error.

warn () {

echo "$@" >&2

}

die () {

status="$1"

shift

warn "$@"

exit "$status"

}

wait_for_container(){

dockername=$@

while true

do

status=`docker inspect $dockername | grep Running | awk -F ':' '{print $2}' | tr -d " :,"`

if [ $status = "true" ]

then

break

else

sleep 1

fi

done

}

wait_for_container $GUESTNAME

# First step: determine type of first argument (bridge, physical interface...),

# Unless "--wait" is set (then skip the whole section)

if [ -z "$WAIT" ]; then

if [ -d "/sys/class/net/$IFNAME" ]

then

if [ -d "/sys/class/net/$IFNAME/bridge" ]; then

IFTYPE=bridge

BRTYPE=linux

elif installed ovs-vsctl && ovs-vsctl list-br|grep -q "^${IFNAME}$"; then

IFTYPE=bridge

BRTYPE=openvswitch

elif [ "$(cat "/sys/class/net/$IFNAME/type")" -eq 32 ]; then # Infiniband IPoIB interface type 32

IFTYPE=ipoib

# The IPoIB kernel module is fussy, set device name to ib0 if not overridden

CONTAINER_IFNAME=${CONTAINER_IFNAME:-ib0}

else IFTYPE=phys

fi

else

case "$IFNAME" in

br*)

IFTYPE=bridge

BRTYPE=linux

;;

ovs*)

if ! installed ovs-vsctl; then

die 1 "Need OVS installed on the system to create an ovs bridge"

fi

IFTYPE=bridge

BRTYPE=openvswitch

;;

*) die 1 "I do not know how to setup interface $IFNAME." ;;

esac

fi

fi

# Set the default container interface name to eth1 if not already set

CONTAINER_IFNAME=${CONTAINER_IFNAME:-eth1}

[ "$WAIT" ] && {

while true; do

# This first method works even without `ip` or `ifconfig` installed,

# but doesn't work on older kernels (e.g. CentOS 6.X). See #128.

grep -q '^1$' "/sys/class/net/$CONTAINER_IFNAME/carrier" && break

# This method hopefully works on those older kernels.

ip link ls dev "$CONTAINER_IFNAME" && break

sleep 1

done > /dev/null 2>&1

exit 0

}

[ "$IFTYPE" = bridge ] && [ "$BRTYPE" = linux ] && [ "$VLAN" ] && {

die 1 "VLAN configuration currently unsupported for Linux bridge."

}

[ "$IFTYPE" = ipoib ] && [ "$MACADDR" ] && {

die 1 "MACADDR configuration unsupported for IPoIB interfaces."

}

# Second step: find the guest (for now, we only support LXC containers)

while read _ mnt fstype options _; do

[ "$fstype" != "cgroup" ] && continue

echo "$options" | grep -qw devices || continue

CGROUPMNT=$mnt

done < /proc/mounts

[ "$CGROUPMNT" ] || {

die 1 "Could not locate cgroup mount point."

}

# Try to find a cgroup matching exactly the provided name.

N=$(find "$CGROUPMNT" -name "$GUESTNAME" | wc -l)

case "$N" in

0)

# If we didn't find anything, try to lookup the container with Docker.

if installed docker; then

RETRIES=3

while [ "$RETRIES" -gt 0 ]; do

DOCKERPID=$(docker inspect --format='{{ .State.Pid }}' "$GUESTNAME")

[ "$DOCKERPID" != 0 ] && break

sleep 1

RETRIES=$((RETRIES - 1))

done

[ "$DOCKERPID" = 0 ] && {

die 1 "Docker inspect returned invalid PID 0"

}

[ "$DOCKERPID" = "<no value>" ] && {

die 1 "Container $GUESTNAME not found, and unknown to Docker."

}

else

die 1 "Container $GUESTNAME not found, and Docker not installed."

fi

;;

1) true ;;

*) die 1 "Found more than one container matching $GUESTNAME." ;;

esac

if [ "$IPADDR" = "dhcp" ]; then

# Check for first available dhcp client

DHCP_CLIENT_LIST="udhcpc dhcpcd dhclient"

for CLIENT in $DHCP_CLIENT_LIST; do

installed "$CLIENT" && {

DHCP_CLIENT=$CLIENT

break

}

done

[ -z "$DHCP_CLIENT" ] && {

die 1 "You asked for DHCP; but no DHCP client could be found."

}

else

# Check if a subnet mask was provided.

case "$IPADDR" in

*/*) : ;;

*)

warn "The IP address should include a netmask."

die 1 "Maybe you meant $IPADDR/24 ?"

;;

esac

# Check if a gateway address was provided.

case "$IPADDR" in

*@*)

GATEWAY="${IPADDR#*@}" GATEWAY="${GATEWAY%%@*}"

IPADDR="${IPADDR%%@*}"

;;

*)

GATEWAY=

;;

esac

fi

if [ "$DOCKERPID" ]; then

NSPID=$DOCKERPID

else

NSPID=$(head -n 1 "$(find "$CGROUPMNT" -name "$GUESTNAME" | head -n 1)/tasks")

[ "$NSPID" ] || {

die 1 "Could not find a process inside container $GUESTNAME."

}

fi

# Check if an incompatible VLAN device already exists

[ "$IFTYPE" = phys ] && [ "$VLAN" ] && [ -d "/sys/class/net/$IFNAME.$VLAN" ] && {

ip -d link show "$IFNAME.$VLAN" | grep -q "vlan.*id $VLAN" || {

die 1 "$IFNAME.$VLAN already exists but is not a VLAN device for tag $VLAN"

}

}

[ ! -d /var/run/netns ] && mkdir -p /var/run/netns

rm -f "/var/run/netns/$NSPID"

ln -s "/proc/$NSPID/ns/net" "/var/run/netns/$NSPID"

# Check if we need to create a bridge.

[ "$IFTYPE" = bridge ] && [ ! -d "/sys/class/net/$IFNAME" ] && {

[ "$BRTYPE" = linux ] && {

(ip link add dev "$IFNAME" type bridge > /dev/null 2>&1) || (brctl addbr "$IFNAME")

ip link set "$IFNAME" up

}

[ "$BRTYPE" = openvswitch ] && {

ovs-vsctl add-br "$IFNAME"

}

}

MTU=$(ip link show "$IFNAME" | awk '{print $5}')

# If it's a bridge, we need to create a veth pair

[ "$IFTYPE" = bridge ] && {

LOCAL_IFNAME="v${CONTAINER_IFNAME}pl${NSPID}"

GUEST_IFNAME="v${CONTAINER_IFNAME}pg${NSPID}"

ip link add name "$LOCAL_IFNAME" mtu "$MTU" type veth peer name "$GUEST_IFNAME" mtu "$MTU"

case "$BRTYPE" in

linux)

(ip link set "$LOCAL_IFNAME" master "$IFNAME" > /dev/null 2>&1) || (brctl addif "$IFNAME" "$LOCAL_IFNAME")

;;

openvswitch)

ovs-vsctl add-port "$IFNAME" "$LOCAL_IFNAME" ${VLAN:+tag="$VLAN"}

;;

esac

ip link set "$LOCAL_IFNAME" up

}

# Note: if no container interface name was specified, pipework will default to ib0

# Note: no macvlan subinterface or ethernet bridge can be created against an

# ipoib interface. Infiniband is not ethernet. ipoib is an IP layer for it.

# To provide additional ipoib interfaces to containers use SR-IOV and pipework

# to assign them.

[ "$IFTYPE" = ipoib ] && {

GUEST_IFNAME=$CONTAINER_IFNAME

}

# If it's a physical interface, create a macvlan subinterface

[ "$IFTYPE" = phys ] && {

[ "$VLAN" ] && {

[ ! -d "/sys/class/net/${IFNAME}.${VLAN}" ] && {

ip link add link "$IFNAME" name "$IFNAME.$VLAN" mtu "$MTU" type vlan id "$VLAN"

}

ip link set "$IFNAME" up

IFNAME=$IFNAME.$VLAN

}

GUEST_IFNAME=ph$NSPID$CONTAINER_IFNAME

ip link add link "$IFNAME" dev "$GUEST_IFNAME" mtu "$MTU" type macvlan mode bridge

ip link set "$IFNAME" up

}

ip link set "$GUEST_IFNAME" netns "$NSPID"

ip netns exec "$NSPID" ip link set "$GUEST_IFNAME" name "$CONTAINER_IFNAME"

[ "$MACADDR" ] && ip netns exec "$NSPID" ip link set dev "$CONTAINER_IFNAME" address "$MACADDR"

if [ "$IPADDR" = "dhcp" ]

then

[ "$DHCP_CLIENT" = "udhcpc" ] && ip netns exec "$NSPID" "$DHCP_CLIENT" -qi "$CONTAINER_IFNAME" -x "hostname:$GUESTNAME"

if [ "$DHCP_CLIENT" = "dhclient" ]; then

# kill dhclient after get ip address to prevent device be used after container close

ip netns exec "$NSPID" "$DHCP_CLIENT" -pf "/var/run/dhclient.$NSPID.pid" "$CONTAINER_IFNAME"

kill "$(cat "/var/run/dhclient.$NSPID.pid")"

rm "/var/run/dhclient.$NSPID.pid"

fi

[ "$DHCP_CLIENT" = "dhcpcd" ] && ip netns exec "$NSPID" "$DHCP_CLIENT" -q "$CONTAINER_IFNAME" -h "$GUESTNAME"

else

ip netns exec "$NSPID" ip addr add "$IPADDR" dev "$CONTAINER_IFNAME"

[ "$GATEWAY" ] && {

ip netns exec "$NSPID" ip route delete default >/dev/null 2>&1 && true

}

ip netns exec "$NSPID" ip link set "$CONTAINER_IFNAME" up

[ "$GATEWAY" ] && {

ip netns exec "$NSPID" ip route get "$GATEWAY" >/dev/null 2>&1 || \

ip netns exec "$NSPID" ip route add "$GATEWAY/32" dev "$CONTAINER_IFNAME"

ip netns exec "$NSPID" ip route replace default via "$GATEWAY"

}

fi

# Give our ARP neighbors a nudge about the new interface

if installed arping; then

IPADDR=$(echo "$IPADDR" | cut -d/ -f1)

ip netns exec "$NSPID" arping -c 1 -A -I "$CONTAINER_IFNAME" "$IPADDR" > /dev/null 2>&1 || true

else

echo "Warning: arping not found; interface may not be immediately reachable"

fi

# Remove NSPID to avoid `ip netns` catch it.

rm -f "/var/run/netns/$NSPID"

# vim: set tabstop=2 shiftwidth=2 softtabstop=2 expandtab :

The proxy-arp script

#! /bin/sh -

#

# proxy-arp Set proxy-arp settings in arp cache

#

# chkconfig: 2345 15 85

# description: using the arp command line utility, populate the arp

# cache with IP addresses for hosts on different media

# which share IP space.

#

# Copyright (c)2002 SecurePipe, Inc. - http://www.securepipe.com/

#

# This program is free software; you can redistribute it and/or modify it

# under the terms of the GNU General Public License as published by the

# Free Software Foundation; either version 2 of the License, or (at your

# option) any later version.

#

# This program is distributed in the hope that it will be useful, but

# WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY

# or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License

# for more details.

#

# You should have received a copy of the GNU General Public License

# along with this program; if not, write to the Free Software Foundation,

# Inc., 59 Temple Place - Suite 330, Boston, MA 02111-1307, USA.

#

# -- written initially during 1998

# 2002-08-14; Martin A. Brown <mabrown@securepipe.com>

# - cleaned up and commented extensively

# - joined the process parsimony bandwagon, and eliminated

# many unnecessary calls to ifconfig and awk

#

gripe () { echo "$@" >&2; }

abort () { gripe "Fatal: $@"; exit 1; }

CONFIG=${CONFIG:-/etc/proxy-arp.conf}

[ -r "$CONFIG" ] || abort $CONFIG is not readable

case "$1" in

start)

# -- create proxy arp settings according to

# table in the config file

#

grep -Ev '^#|^$' $CONFIG | {

while read INTERFACE IPADDR ; do

[ -z "$INTERFACE" -o -z "$IPADDR" ] && continue

arp -s $IPADDR -i $INTERFACE -D $INTERFACE pub

done

}

;;

stop)

# -- clear the cache for any entries in the

# configuration file

#

grep -Ev '^#|^$' $CONFIG | {

while read INTERFACE IPADDR ; do

[ -z "$INTERFACE" -o -z "$IPADDR" ] && continue

arp -d $IPADDR -i $INTERFACE

done

}

;;

status)

arp -an | grep -i perm

;;

restart)

$0 stop

$0 start

;;

*)

echo "Usage: proxy-arp {start|stop|status|restart}"

exit 1

esac

exit 0

#

# - end of proxy-arp

The proxy-arp.conf configuration file

#

# Proxy ARP configuration file

#

# -- This is the proxy-arp configuration file. A sysV init script

# (proxy-arp) reads this configuration file and creates the

# required arp table entries.

#

# Copyright (c)2002 SecurePipe, Inc. - http://www.securepipe.com/

#

# This program is free software; you can redistribute it and/or modify it

# under the terms of the GNU General Public License as published by the

# Free Software Foundation; either version 2 of the License, or (at your

# option) any later version.

#

# This program is distributed in the hope that it will be useful, but

# WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY

# or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License

# for more details.

#

# You should have received a copy of the GNU General Public License

# along with this program; if not, write to the Free Software Foundation,

# Inc., 59 Temple Place - Suite 330, Boston, MA 02111-1307, USA.

#

#

# -- file was created during 1998

# 2002-08-15; Martin A. Brown <mabrown@securepipe.com>

# - format unchanged

# - added comments

#

# -- field descriptions:

# field 1 this field contains the ethernet interface on which

# to advertise reachability of an IP.

# field 2 this field contains the IP address for which to advertise

#

# -- notes

#

# - white space, lines beginning with a comment and blank lines are ignored

#

# -- examples

#

# - each example is commented with an English description of the

# resulting configuration

# - followed by a pseudo shellcode description of how to understand

# what will happen

#

# -- example #0; advertise for 10.10.15.175 on eth1

#

# eth1 10.10.15.175

#

# for any arp request on eth1; do

# if requested address is 10.10.15.175; then

# answer arp request with our ethernet address from eth1 (so

# that the requestor sends IP packets to us)

# fi

# done

#

# -- example #1; advertise for 172.30.48.10 on eth0

#

# eth0 172.30.48.10

#

# for any arp request on eth0; do

# if requested address is 172.30.48.10; then

# answer arp request with our ethernet address from eth0 (so

# that the requestor sends IP packets to us)

# fi

# done

#

# -- add your own configuration here

eth1 140.252.1.29

netbside 140.252.1.92

netbside 140.252.1.32

netbside 140.252.1.11

netbside 140.252.1.4

# -- end /etc/proxy-arp.conf

#
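The proxy-arp script publishes each entry with the legacy `arp -s ... pub` command. With iproute2, the same proxy ARP entries can be added via `ip neigh add proxy`. A minimal sketch that reads the same file format; `load_proxy_arp` is a hypothetical name, and like the original it still relies on the proxy_arp sysctls that setupenv.sh sets:

```shell
# Read "<interface> <ip>" lines (blank lines and '#' comments ignored)
# and publish each IP as a proxy ARP entry on that interface.
load_proxy_arp() {
  conf=${1:-/etc/proxy-arp.conf}
  grep -Ev '^[[:space:]]*(#|$)' "$conf" | while read -r iface addr _; do
    [ -n "$iface" ] && [ -n "$addr" ] || continue
    ip neigh add proxy "$addr" dev "$iface"
  done
}

# Usage inside netb: load_proxy_arp /etc/proxy-arp.conf
# Inspect the result with: ip neigh show proxy
```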

Dockerfile

This is the Dockerfile the image was built from; we can also build the image ourselves:

FROM hub.c.163.com/public/ubuntu:14.04

RUN apt-get -y update && apt-get install -y iproute2 iputils-arping net-tools tcpdump curl telnet iputils-tracepath traceroute

RUN mv /usr/sbin/tcpdump /usr/bin/tcpdump

ENTRYPOINT /usr/sbin/sshd -D
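To use a self-built image, build it in the directory that contains this Dockerfile and pass the same tag to setupenv.sh. A minimal sketch; `build_lab_image` and the tag `tcpip-lab:local` are hypothetical, and any tag works as long as both commands agree:

```shell
# Build the lab image from the Dockerfile in the current directory.
build_lab_image() {
  tag=${1:-tcpip-lab:local}
  docker build -t "$tag" .
}

# Usage, from the cloned repository:
# build_lab_image tcpip-lab:local && ./setupenv.sh enp0s25 tcpip-lab:local
```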

TCP/IP testing

List the running containers:

docker ps

Pick a container ID, open a shell inside it, and test:

docker exec -it 96202fe3cbbf '/bin/sh'

Inside the container, check the interfaces and capture packets:

ip addr

tcpdump
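A bare tcpdump captures everything on the first interface. A more targeted experiment is to watch bsdi's point-to-point interface while slip pings sun: per the routes in setupenv.sh, both the requests and the replies are forwarded through bsdiside. A minimal sketch; `watch_p2p_ping` is a hypothetical name, and it assumes the image has coreutils `timeout` so the capture cannot hang if the ping fails:

```shell
# Capture ICMP on bsdi's p2p interface while slip pings sun (run on the host).
watch_p2p_ping() {
  # -n: no name resolution; -c 4: two echo requests plus two replies
  docker exec bsdi timeout 10 tcpdump -n -c 4 -i bsdiside icmp &
  sleep 1   # give tcpdump a moment to start
  docker exec slip ping -c 2 140.252.13.33
  wait
}
```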

Now let's look at the data that corresponds to the topology in the figure.

On the host, use docker ps to see which containers are running:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4554f90a4781 hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours slip

96202fe3cbbf hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours bsdi

1ea5fcb166eb hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours svr4

4ac3b6f90700 hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours sun

da1c7d500694 hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours netb

03e7ffa2334d hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours gateway

7831e50be541 hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours gemini

875cc818bf7d hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours solaris

c1d46e3f2b98 hub.c.163.com/liuchao110119163/ubuntu:tcpip "/bin/sh -c '/usr/sb…" 4 hours ago Up 4 hours aix

On the host, use ip addr to look at the interfaces:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s25: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 50:7b:9d:62:fe:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.44.137/24 brd 192.168.44.255 scope global noprefixroute dynamic enp0s25

valid_lft 27762sec preferred_lft 27762sec

inet6 fe80::e8b7:c38e:b752:14ec/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: wlp4s0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether 30:52:cb:70:9f:23 brd ff:ff:ff:ff:ff:ff

4: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether c2:69:2c:94:85:0c brd ff:ff:ff:ff:ff:ff

7: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:78:f0:6f:0e brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

32: net1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 1a:b1:8b:e9:36:4e brd ff:ff:ff:ff:ff:ff

inet6 fe80::18b1:8bff:fee9:364e/64 scope link

valid_lft forever preferred_lft forever

33: net2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 0e:72:c6:ce:fb:4d brd ff:ff:ff:ff:ff:ff

inet6 fe80::c72:c6ff:fece:fb4d/64 scope link

valid_lft forever preferred_lft forever

35: veth1pl8022@if34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 52:cf:45:eb:4a:e4 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::50cf:45ff:feeb:4ae4/64 scope link

valid_lft forever preferred_lft forever

37: veth1pl8107@if36: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether fe:b5:19:ac:69:81 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::fcb5:19ff:feac:6981/64 scope link

valid_lft forever preferred_lft forever

39: veth1pl8290@if38: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 96:84:53:92:d7:8e brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::9484:53ff:fe92:d78e/64 scope link

valid_lft forever preferred_lft forever

41: veth1pl8381@if40: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 9a:42:2a:89:d4:ea brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::9842:2aff:fe89:d4ea/64 scope link

valid_lft forever preferred_lft forever

43: veth1pl8499@if42: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 32:33:4b:9e:0d:91 brd ff:ff:ff:ff:ff:ff link-netnsid 4

inet6 fe80::3033:4bff:fe9e:d91/64 scope link

valid_lft forever preferred_lft forever

45: veth1pl8762@if44: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether d2:a1:14:f1:b2:2a brd ff:ff:ff:ff:ff:ff link-netnsid 5

inet6 fe80::d0a1:14ff:fef1:b22a/64 scope link

valid_lft forever preferred_lft forever

47: veth1pl8591@if46: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether b6:aa:2b:ba:0f:60 brd ff:ff:ff:ff:ff:ff link-netnsid 6

inet6 fe80::b4aa:2bff:feba:f60/64 scope link

valid_lft forever preferred_lft forever

49: veth1pl8678@if48: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether 46:de:11:7e:eb:a0 brd ff:ff:ff:ff:ff:ff link-netnsid 7

inet6 fe80::44de:11ff:fe7e:eba0/64 scope link

valid_lft forever preferred_lft forever

54: gatewayout@if55: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:45:eb:8d:ae:1a brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet 140.252.104.1/24 scope global gatewayout

valid_lft forever preferred_lft forever

inet6 fe80::f845:ebff:fe8d:ae1a/64 scope link

valid_lft forever preferred_lft forever
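Each host-side interface above is named veth1pl<PID>, following pipework's `LOCAL_IFNAME="v${CONTAINER_IFNAME}pl${NSPID}"` with eth1 as the container interface. Printing every container's PID therefore tells you which container each veth belongs to. A minimal sketch; `map_veths` is a hypothetical name, and slip is left out because it is connected only through the slipside/bsdiside pair, not through pipework:

```shell
# Print "<container> <pid> <host-side veth>" for each container attached by pipework.
map_veths() {
  for name in aix solaris gemini gateway netb sun svr4 bsdi; do
    pid=$(docker inspect --format '{{.State.Pid}}' "$name")
    echo "$name $pid veth1pl$pid"
  done
}
```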

On the host, use ip r to look at the routes:

default via 192.168.44.1 dev enp0s25 proto dhcp metric 100

140.252.1.0/24 via 140.252.104.2 dev gatewayout

140.252.13.32/27 via 140.252.104.2 dev gatewayout

140.252.13.64/27 via 140.252.104.2 dev gatewayout

140.252.104.0/24 dev gatewayout proto kernel scope link src 140.252.104.1

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

192.168.44.0/24 dev enp0s25 proto kernel scope link src 192.168.44.137 metric 100

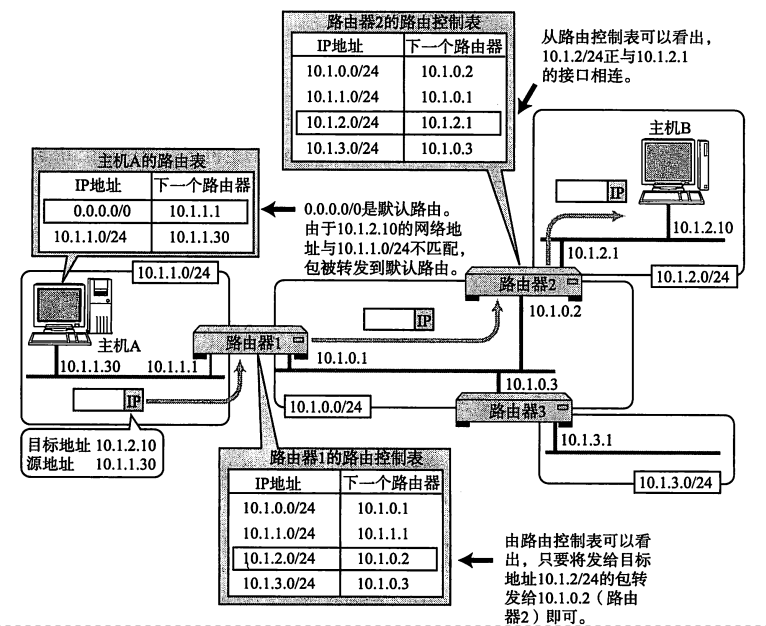

Routing means finding the next hop. Taking the table above:

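default via 192.168.44.1 dev enp0s25 means that packets to any address not matched by a more specific route are sent to 192.168.44.1 out of this host's enp0s25 interface; this is the default route;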

140.252.1.0/24 via 140.252.104.2 dev gatewayout means that packets to the 140.252.1.0/24 network are sent to the next hop 140.252.104.2 out of the gatewayout interface;

140.252.13.32/27 via 140.252.104.2 dev gatewayout and 140.252.13.64/27 via 140.252.104.2 dev gatewayout work the same way for their networks;

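140.252.104.0/24 dev gatewayout proto kernel scope link src 140.252.104.1 means that the 140.252.104.0/24 network is directly attached to gatewayout, so no next hop is needed (scope link). The kernel added this route automatically when the address was configured (proto kernel), and src 140.252.104.1 is the preferred source address for packets sent via this route; it selects the source address and does not filter which connections may use the route.

This route is also what makes the three routes above work: looking up their next hop 140.252.104.2 matches 140.252.104.0/24 by longest prefix match, so those packets go straight out of gatewayout;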

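172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown is the same kind of directly connected route, for docker0 (linkdown means the interface currently has no carrier);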

192.168.44.0/24 dev enp0s25 proto kernel scope link src 192.168.44.137 是说 要去192.168.44.0/24网段 ,走该设备上的enp0s25接口 过去,

不过只面向来源是 192.168.44.137 的连接 ;
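To make the longest-prefix-match rule concrete, here is a minimal Python sketch that picks a route from the host's table above. The tuple layout and the lookup function are illustrative assumptions, not how the kernel implements it. A linear scan stands in for the kernel's real lookup structure, and metrics are ignored because no two entries here share a prefix.

```python
import ipaddress

# The host's routes from the ip r output above: (prefix, next hop, device).
# A next hop of None marks a directly connected route (no "via").
ROUTES = [
    ("0.0.0.0/0", "192.168.44.1", "enp0s25"),  # the "default" entry
    ("140.252.1.0/24", "140.252.104.2", "gatewayout"),
    ("140.252.13.32/27", "140.252.104.2", "gatewayout"),
    ("140.252.13.64/27", "140.252.104.2", "gatewayout"),
    ("140.252.104.0/24", None, "gatewayout"),
    ("172.17.0.0/16", None, "docker0"),
    ("192.168.44.0/24", None, "enp0s25"),
]


def lookup(dst):
    """Return (prefix, next_hop, dev) of the longest prefix that contains dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, via, dev in ROUTES:
        net = ipaddress.ip_network(prefix)
        # Keep the matching route with the longest prefix seen so far.
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, via, dev)
    if best is None:
        raise LookupError(f"no route to {dst}")
    return str(best[0]), best[1], best[2]


if __name__ == "__main__":
    for dst in ["140.252.1.29", "140.252.104.2", "8.8.8.8"]:
        print(dst, "->", lookup(dst))
```

Resolving 140.252.1.29 yields the next hop 140.252.104.2 on gatewayout. Looking up 140.252.104.2 itself then lands on the connected 140.252.104.0/24 route, which is the two-step resolution described above.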

You can also view the host's routes with route -n:

Kernel IP routing table

| Destination | Gateway | Genmask | Flags | Metric | Ref | Use | Iface |
|---|---|---|---|---|---|---|---|
| 0.0.0.0 | 192.168.44.1 | 0.0.0.0 | UG | 100 | 0 | 0 | enp0s25 |
| 140.252.1.0 | 140.252.104.2 | 255.255.255.0 | UG | 0 | 0 | 0 | gatewayout |
| 140.252.13.32 | 140.252.104.2 | 255.255.255.224 | UG | 0 | 0 | 0 | gatewayout |
| 140.252.13.64 | 140.252.104.2 | 255.255.255.224 | UG | 0 | 0 | 0 | gatewayout |
| 140.252.104.0 | 0.0.0.0 | 255.255.255.0 | U | 0 | 0 | 0 | gatewayout |
| 172.17.0.0 | 0.0.0.0 | 255.255.0.0 | U | 0 | 0 | 0 | docker0 |
| 192.168.44.0 | 0.0.0.0 | 255.255.255.0 | U | 100 | 0 | 0 | enp0s25 |
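route -n prints the prefix length as a dotted netmask, while ip r prints it in CIDR form. The following small Python sketch uses hypothetical parse and to_cidr helpers to show that each Destination/Genmask pair is the same prefix ip r shows. For example, 255.255.255.224 corresponds to /27.

```python
import ipaddress

# Rows copied from the host's route -n output above:
# Destination Gateway Genmask Flags Metric Ref Use Iface
ROUTE_N = """\
0.0.0.0 192.168.44.1 0.0.0.0 UG 100 0 0 enp0s25
140.252.1.0 140.252.104.2 255.255.255.0 UG 0 0 0 gatewayout
140.252.13.32 140.252.104.2 255.255.255.224 UG 0 0 0 gatewayout
140.252.13.64 140.252.104.2 255.255.255.224 UG 0 0 0 gatewayout
140.252.104.0 0.0.0.0 255.255.255.0 U 0 0 0 gatewayout
172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0
192.168.44.0 0.0.0.0 255.255.255.0 U 100 0 0 enp0s25
"""


def to_cidr(destination, genmask):
    """Combine a Destination/Genmask pair into CIDR notation."""
    return str(ipaddress.IPv4Network(f"{destination}/{genmask}"))


def parse(text):
    """Turn route -n rows into (cidr, next_hop, iface, metric) tuples."""
    rows = []
    for line in text.splitlines():
        dest, gw, mask, _flags, metric, _ref, _use, iface = line.split()
        # A Gateway of 0.0.0.0 means "no gateway": the network is directly attached.
        via = None if gw == "0.0.0.0" else gw
        rows.append((to_cidr(dest, mask), via, iface, int(metric)))
    return rows


if __name__ == "__main__":
    for row in parse(ROUTE_N):
        print(row)
```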

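What the columns and flags mean:

Destination: the destination network or host

Gateway: the gateway (next-hop) address; 0.0.0.0 means no gateway, i.e. the network is directly attached

Genmask: the netmask of the destination

Flags: route flags describing the entry:

U (Up): the route is up

H (Host): the destination is a single host rather than a network

G (Gateway): packets are sent via a gateway (router) rather than delivered directly

R (Reinstate): the route is reinstated for dynamic routing

D (Dynamically): the route was installed dynamically by a routing daemon or an ICMP redirect

M (Modified): the route was modified by a routing daemon or an ICMP redirect

!: reject route; matching packets are rejected

Metric: the distance (cost) to the destination, traditionally counted in hops; among routes with the same prefix, the lowest metric is preferred

Ref: the number of references to this route (not used by the Linux kernel)

Use: the number of lookups for this route

Iface: the interface through which packets for this route are sent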
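As a quick reference, the flag letters can be expanded with a lookup table. This is a hypothetical helper. The descriptions follow the wording of the net-tools route(8) man page, which also lists A (installed by addrconf) and C (cache entry).

```python
# Flag letters and descriptions as worded in the net-tools route(8) man page.
FLAG_MEANINGS = {
    "U": "route is up",
    "H": "target is a host",
    "G": "use gateway",
    "R": "reinstate route for dynamic routing",
    "D": "dynamically installed by daemon or redirect",
    "M": "modified from routing daemon or redirect",
    "A": "installed by addrconf",
    "C": "cache entry",
    "!": "reject route",
}


def decode_flags(flags):
    """Expand a route -n Flags string such as 'UG' into descriptions."""
    unknown = [ch for ch in flags if ch not in FLAG_MEANINGS]
    if unknown:
        raise ValueError(f"unknown flag(s): {''.join(unknown)}")
    return [FLAG_MEANINGS[ch] for ch in flags]


if __name__ == "__main__":
    print(decode_flags("UG"))  # the gateway routes in the table above
    print(decode_flags("U"))   # the directly attached networks
```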

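Use ip route show table local to view the host's local routing table. The kernel maintains this table for the host's own addresses (local entries) and broadcast addresses (broadcast entries):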

broadcast 127.0.0.0 dev lo proto kernel scope link src 127.0.0.1

local 127.0.0.0/8 dev lo proto kernel scope host src 127.0.0.1

local 127.0.0.1 dev lo proto kernel scope host src 127.0.0.1

broadcast 127.255.255.255 dev lo proto kernel scope link src 127.0.0.1

broadcast 140.252.104.0 dev gatewayout proto kernel scope link src 140.252.104.1

local 140.252.104.1 dev gatewayout proto kernel scope host src 140.252.104.1

broadcast 140.252.104.255 dev gatewayout proto kernel scope link src 140.252.104.1

broadcast 172.17.0.0 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

local 172.17.0.1 dev docker0 proto kernel scope host src 172.17.0.1

broadcast 172.17.255.255 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

broadcast 192.168.44.0 dev enp0s25 proto kernel scope link src 192.168.44.137

local 192.168.44.137 dev enp0s25 proto kernel scope host src 192.168.44.137

broadcast 192.168.44.255 dev enp0s25 proto kernel scope link src 192.168.44.137
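The broadcast and local entries above follow directly from each interface address and its prefix. The minimal sketch below derives them with Python's ipaddress module. The local_entries function is illustrative, lo is left out, and the exact set of entries the kernel creates can vary between kernel versions. The same calculation gives 140.252.13.95 as the broadcast address of slip's 140.252.13.64/27 network, which appears further below.

```python
import ipaddress

# Address/prefix pairs from the host's ip addr / ip r output above (lo omitted).
ADDRESSES = {
    "gatewayout": "140.252.104.1/24",
    "docker0": "172.17.0.1/16",
    "enp0s25": "192.168.44.137/24",
}


def local_entries(dev, cidr):
    """Derive the broadcast/local entries that ip route show table local lists for one address."""
    iface = ipaddress.ip_interface(cidr)
    net = iface.network
    return [
        f"broadcast {net.network_address} dev {dev}",
        f"local {iface.ip} dev {dev}",
        f"broadcast {net.broadcast_address} dev {dev}",
    ]


if __name__ == "__main__":
    for dev, cidr in ADDRESSES.items():
        for entry in local_entries(dev, cidr):
            print(entry)
```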

The following figure makes this clear at a glance:

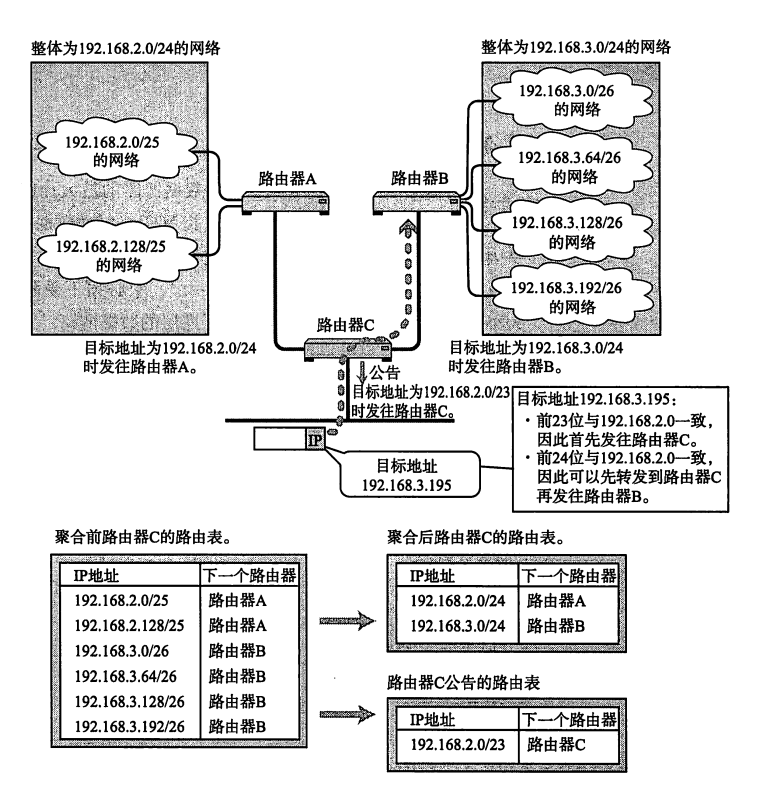

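Next, consider a route-aggregation figure. Router C advertises 192.168.2.0/23, a single prefix that covers both 192.168.2.0/24 and 192.168.3.0/24, so traffic for both networks is routed through C.

Now let's look at the addresses and routes inside each container shown in the figure.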
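Python's ipaddress.collapse_addresses performs the same kind of summarization. The minimal sketch below merges the two /24 networks from the figure. The aggregate helper is a hypothetical name used only for illustration.

```python
import ipaddress


def aggregate(prefixes):
    """Merge adjacent or overlapping prefixes into the fewest covering prefixes."""
    nets = [ipaddress.ip_network(p) for p in prefixes]
    return [str(n) for n in ipaddress.collapse_addresses(nets)]


if __name__ == "__main__":
    summary = aggregate(["192.168.2.0/24", "192.168.3.0/24"])
    print(summary)  # a single /23 covers both /24 networks
    agg = ipaddress.ip_network(summary[0])
    for p in ["192.168.2.0/24", "192.168.3.0/24", "192.168.4.0/24"]:
        print(p, "covered:", ipaddress.ip_network(p).subnet_of(agg))
```

The merge works only because 192.168.2.0 sits on a /23 boundary. 192.168.1.0/24 and 192.168.2.0/24 are adjacent, but they cannot be summarized into a single /23.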

slip

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

27: slipside@if26: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether b2:d4:b8:19:33:13 brd ff:ff:ff:ff:ff:ff

inet 140.252.13.65/27 scope global slipside

valid_lft forever preferred_lft forever

Routes (ip r, followed by route -n):

default via 140.252.13.66 dev slipside

140.252.13.64/27 dev slipside proto kernel scope link src 140.252.13.65

Kernel IP routing table

| Destination | Gateway | Genmask | Flags | Metric | Ref | Use | Iface |
|---|---|---|---|---|---|---|---|
| 0.0.0.0 | 140.252.13.66 | 0.0.0.0 | UG | 0 | 0 | 0 | slipside |
| 140.252.13.64 | 0.0.0.0 | 255.255.255.224 | U | 0 | 0 | 0 | slipside |

Local routing table (ip route show table local):

broadcast 127.0.0.0 dev lo proto kernel scope link src 127.0.0.1

local 127.0.0.0/8 dev lo proto kernel scope host src 127.0.0.1

local 127.0.0.1 dev lo proto kernel scope host src 127.0.0.1

broadcast 127.255.255.255 dev lo proto kernel scope link src 127.0.0.1

broadcast 140.252.13.64 dev slipside proto kernel scope link src 140.252.13.65

local 140.252.13.65 dev slipside proto kernel scope host src 140.252.13.65

broadcast 140.252.13.95 dev slipside proto kernel scope link src 140.252.13.65

bsdi

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

20: eth1@if21: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 2a:f8:2a:30:1e:32 brd ff:ff:ff:ff:ff:ff

inet 140.252.13.35/27 scope global eth1

valid_lft forever preferred_lft forever

26: bsdiside@if27: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 1a:89:e1:fb:86:70 brd ff:ff:ff:ff:ff:ff

inet 140.252.13.66/27 scope global bsdiside

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.13.33 dev eth1

140.252.13.32/27 dev eth1 proto kernel scope link src 140.252.13.35

140.252.13.64/27 dev bsdiside proto kernel scope link src 140.252.13.66

sun

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

22: eth1@if23: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether e6:e7:2f:d9:08:96 brd ff:ff:ff:ff:ff:ff

inet 140.252.13.33/27 scope global eth1

valid_lft forever preferred_lft forever

29: sunside@if28: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ce:ce:26:50:42:8f brd ff:ff:ff:ff:ff:ff

inet 140.252.1.29/24 scope global sunside

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.1.4 dev sunside

140.252.1.0/24 dev sunside proto kernel scope link src 140.252.1.29

140.252.13.32/27 dev eth1 proto kernel scope link src 140.252.13.33

140.252.13.64/27 via 140.252.13.35 dev eth1

svr4

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

24: eth1@if25: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 66:58:74:68:43:96 brd ff:ff:ff:ff:ff:ff

inet 140.252.13.34/27 scope global eth1

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.13.33 dev eth1

140.252.13.32/27 dev eth1 proto kernel scope link src 140.252.13.34

140.252.13.64/27 via 140.252.13.35 dev eth1

netb

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

18: eth1@if19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 32:18:d0:6e:3d:07 brd ff:ff:ff:ff:ff:ff

inet 140.252.1.183/24 scope global eth1

valid_lft forever preferred_lft forever

28: netbside@if29: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether f2:6e:d5:e7:d8:b3 brd ff:ff:ff:ff:ff:ff

Routes (ip r):

default via 140.252.1.4 dev eth1

140.252.1.0/24 dev eth1 proto kernel scope link src 140.252.1.183

140.252.1.29 dev netbside scope link

140.252.13.32/27 via 140.252.1.29 dev netbside

140.252.13.64/27 via 140.252.1.29 dev netbside
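netb's table shows longest prefix match in action. The address 140.252.1.29 falls inside 140.252.1.0/24 on eth1, but the host route 140.252.1.29 (a /32) is more specific, so traffic to sun's address leaves through netbside. The minimal Python sketch below uses netb's routes, copied by hand. matching_routes and out_dev are illustrative names.

```python
import ipaddress

# netb's routes from the ip r output above; "140.252.1.29" without a prefix is a /32.
NETB_ROUTES = [
    ("0.0.0.0/0", "140.252.1.4", "eth1"),
    ("140.252.1.0/24", None, "eth1"),
    ("140.252.1.29/32", None, "netbside"),
    ("140.252.13.32/27", "140.252.1.29", "netbside"),
    ("140.252.13.64/27", "140.252.1.29", "netbside"),
]


def matching_routes(dst):
    """All routes whose prefix contains dst, most specific first."""
    addr = ipaddress.ip_address(dst)
    hits = [(ipaddress.ip_network(p), via, dev) for p, via, dev in NETB_ROUTES
            if addr in ipaddress.ip_network(p)]
    return sorted(hits, key=lambda r: r[0].prefixlen, reverse=True)


def out_dev(dst):
    """Output device chosen by longest prefix match."""
    return matching_routes(dst)[0][2]


if __name__ == "__main__":
    for net, via, dev in matching_routes("140.252.1.29"):
        print(net, via, dev)
    print("chosen:", out_dev("140.252.1.29"))
```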

aix

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

10: eth1@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 6a:25:80:9d:9f:94 brd ff:ff:ff:ff:ff:ff

inet 140.252.1.92/24 scope global eth1

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.1.4 dev eth1

140.252.1.0/24 dev eth1 proto kernel scope link src 140.252.1.92

140.252.13.32/27 via 140.252.1.29 dev eth1

140.252.13.64/27 via 140.252.1.29 dev eth1

solaris

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

12: eth1@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 3e:1a:58:bc:82:4e brd ff:ff:ff:ff:ff:ff

inet 140.252.1.32/24 scope global eth1

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.1.4 dev eth1

140.252.1.0/24 dev eth1 proto kernel scope link src 140.252.1.32

140.252.13.32/27 via 140.252.1.29 dev eth1

140.252.13.64/27 via 140.252.1.29 dev eth1

gemini

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

14: eth1@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 52:c2:60:36:46:ba brd ff:ff:ff:ff:ff:ff

inet 140.252.1.11/24 scope global eth1

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.1.4 dev eth1

140.252.1.0/24 dev eth1 proto kernel scope link src 140.252.1.11

140.252.13.32/27 via 140.252.1.29 dev eth1

140.252.13.64/27 via 140.252.1.29 dev eth1

gateway

Interfaces (ip addr):

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

16: eth1@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 8e:f4:f3:3b:36:3d brd ff:ff:ff:ff:ff:ff

inet 140.252.1.4/24 scope global eth1

valid_lft forever preferred_lft forever

31: gatewayin@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether aa:de:e6:76:e1:84 brd ff:ff:ff:ff:ff:ff

inet 140.252.104.2/24 scope global gatewayin

valid_lft forever preferred_lft forever

Routes (ip r):

default via 140.252.104.1 dev gatewayin

140.252.1.0/24 dev eth1 proto kernel scope link src 140.252.1.4

140.252.13.32/27 via 140.252.1.29 dev eth1

140.252.13.64/27 via 140.252.1.29 dev eth1

140.252.104.0/24 dev gatewayin proto kernel scope link src 140.252.104.2
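Putting the per-container tables together, you can follow a packet hop by hop. At each node, look up the destination. Then either hand the packet to the next-hop address or, for a route without via, deliver it on the link.

The Python sketch below is a simplification that rests on several assumptions. The tables are transcribed from the outputs above, and output devices are ignored. The names ROUTES, OWNER, next_hop and trace are hypothetical. sun and netb are left out: judging from netb's host route for 140.252.1.29, the link between them is not a plain shared subnet, and this model does not capture that.

```python
import ipaddress

# ip r tables transcribed from the outputs above as (prefix, next hop);
# None as the next hop marks a directly connected route (no "via").
ROUTES = {
    "host": [("0.0.0.0/0", "192.168.44.1"), ("140.252.1.0/24", "140.252.104.2"),
             ("140.252.13.32/27", "140.252.104.2"), ("140.252.13.64/27", "140.252.104.2"),
             ("140.252.104.0/24", None), ("172.17.0.0/16", None),
             ("192.168.44.0/24", None)],
    "slip": [("0.0.0.0/0", "140.252.13.66"), ("140.252.13.64/27", None)],
    "bsdi": [("0.0.0.0/0", "140.252.13.33"), ("140.252.13.32/27", None),
             ("140.252.13.64/27", None)],
    "svr4": [("0.0.0.0/0", "140.252.13.33"), ("140.252.13.32/27", None),
             ("140.252.13.64/27", "140.252.13.35")],
    "aix": [("0.0.0.0/0", "140.252.1.4"), ("140.252.1.0/24", None),
            ("140.252.13.32/27", "140.252.1.29"), ("140.252.13.64/27", "140.252.1.29")],
    "gateway": [("0.0.0.0/0", "140.252.104.1"), ("140.252.1.0/24", None),
                ("140.252.13.32/27", "140.252.1.29"), ("140.252.13.64/27", "140.252.1.29"),
                ("140.252.104.0/24", None)],
}

# Which node owns each address, taken from the ip addr outputs (sun and netb omitted).
OWNER = {
    "140.252.104.1": "host", "140.252.104.2": "gateway", "140.252.1.4": "gateway",
    "140.252.13.65": "slip", "140.252.13.66": "bsdi", "140.252.13.35": "bsdi",
    "140.252.13.34": "svr4", "140.252.1.92": "aix",
}


def next_hop(node, dst):
    """Longest-prefix match in node's table; a connected route delivers to dst itself."""
    addr = ipaddress.ip_address(dst)
    matches = [(ipaddress.ip_network(prefix), via) for prefix, via in ROUTES[node]
               if addr in ipaddress.ip_network(prefix)]
    _, via = max(matches, key=lambda m: m[0].prefixlen)
    return via if via is not None else dst


def trace(src, dst, max_hops=8):
    """Return the list of nodes a packet from src to dst passes through."""
    path = [src]
    while OWNER.get(dst) != path[-1]:
        if len(path) > max_hops:
            raise RuntimeError(f"no delivery after {max_hops} hops")
        # A KeyError here means the next hop is a node this sketch does not model.
        path.append(OWNER[next_hop(path[-1], dst)])
    return path


if __name__ == "__main__":
    print(" -> ".join(trace("slip", "140.252.13.34")))
    print(" -> ".join(trace("host", "140.252.1.92")))
```

For example, slip has only a default route toward bsdi (140.252.13.66), and bsdi reaches svr4 over the directly connected 140.252.13.32/27, so the sketch reports slip, bsdi, svr4.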

References

趣谈网络协议 (Fun Talk on Network Protocols) https://github.com/popsuper1982/tcpipillustrated

Get Docker Engine - Community for Ubuntu https://docs.docker.com/install/linux/docker-ce/ubuntu/

Installing Docker on Ubuntu https://www.runoob.com/docker/ubuntu-docker-install.html

Introduction to Open vSwitch https://www.jianshu.com/p/fe60bfc4eaea

Using ovs-vsctl https://www.jianshu.com/p/b73a113ab0ed

Open vSwitch architecture analysis and hands-on features https://blog.csdn.net/Jmilk/article/details/86989975#Open_vSwitch__172

Using the bridge-utils bridge tool https://blog.csdn.net/yuzx2008/article/details/50432130

Linux arping command usage in detail (Linux command reference) https://ipcmen.com/arping

iptables explained (1): iptables concepts http://www.zsythink.net/archives/1199/

IPTABLES configuration on Linux in detail https://www.cnblogs.com/alimac/p/5848372.html

Linux sysctl command usage in detail (Linux command reference) https://ipcmen.com/sysctl

The sysctl command on Linux explained https://www.cnblogs.com/root0/p/10001519.html

Usage of ifconfig, route, ip route, ip addr and ip link https://blog.51cto.com/13150617/1963833

Detailed tcpdump tutorial https://www.jianshu.com/p/d9162722f189

D.2. ip route documentation http://linux-ip.net/html/tools-ip-route.html

Linux: the three most commonly used network commands, route, traceroute and ip https://www.cnblogs.com/anliven/p/6757358.html

图解TCP/IP (Illustrated TCP/IP), 5th edition, 竹下隆史 (Japan) et al.